Multi-agent visual SLAM

Problem statement

With the ever-growing abundance of cameras in cars, smartphones and other mobile computing platforms, visual Simultaneous Localization And Mapping (SLAM) has made considerable progress [1, 2]. However, these systems are typically designed for single-agent scenarios. For Belgian Defence and partner institutions, extending them to multi-agent operation on platforms with limited computational power would be highly valuable. In both tactical and firefighting operations, knowing one’s location and building (sparse) maps on the fly would significantly improve situational awareness. An additional challenge is that such systems must be wearable and operable hands-free. Cameras will therefore be mounted on headsets, and computing will be limited to smartphones or low-power embedded systems such as the Nvidia Jetson Nano.

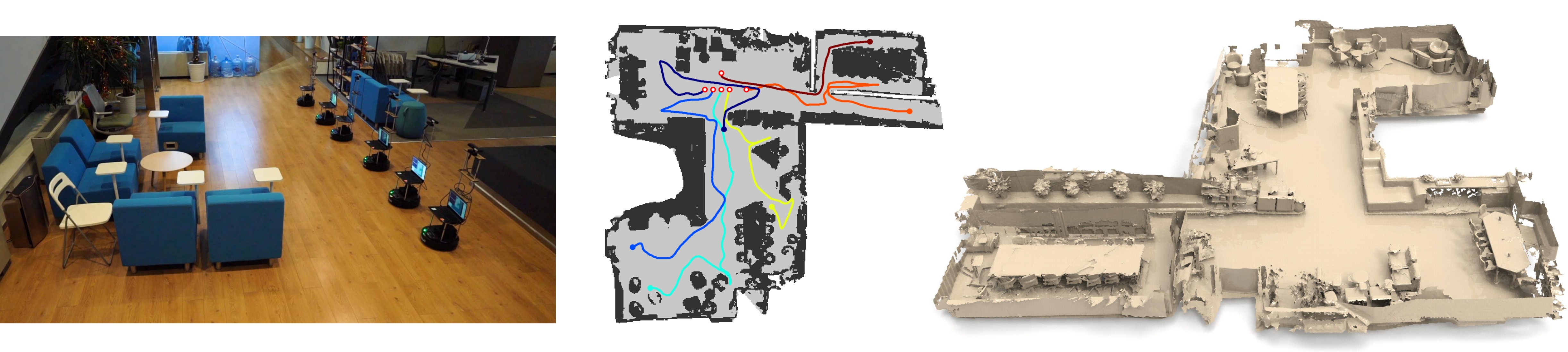

Figure 1: The multi-agent SLAM system from [3]. Several rovers collaborate to build a single 3D map of the environment.

Goal

The goal of this master thesis is to extend an existing visual SLAM system that uses monocular or stereo video input [1, 2] so that it can run with multiple agents that have limited computing power. Specifically, the thesis aims 1) to develop an efficient algorithm for multi-agent SLAM, 2) to devise optimal trajectories for mapping unknown places while maintaining coherent relative locations between agents, and 3) to deal with low-bandwidth communication channels (simulated over WiFi) when exchanging compressed partial maps or location information.
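The proposal does not prescribe how partial maps are compressed. As one possible illustration of the bandwidth concern in goal 3, the following sketch assumes that a partial map is simply a list of 3D map points in metres. It packs them as 16-bit fixed-point offsets from the map's bounding-box corner at an assumed 1 cm resolution. The names `quantize_points` and `dequantize_points` and the wire format are hypothetical; this is not the method developed in the thesis.

```python
import struct

# Hypothetical wire format (an assumption, not taken from the thesis):
# header = origin x, y, z and resolution as float64, point count as uint32;
# body   = one little-endian uint16 triplet per map point.
HEADER = "<4dI"
POINT = "<3H"


def quantize_points(points, resolution=0.01):
    """Pack 3D map points (metres) into fixed-point uint16 offsets."""
    if points:
        # Per-axis minimum, so every offset is non-negative.
        origin = tuple(min(p[i] for p in points) for i in range(3))
    else:
        origin = (0.0, 0.0, 0.0)
    body = bytearray()
    for p in points:
        # Round each offset to the nearest multiple of the resolution.
        q = [round((p[i] - origin[i]) / resolution) for i in range(3)]
        if max(q) > 0xFFFF:
            raise ValueError("map extent too large for uint16 at this resolution")
        body += struct.pack(POINT, *q)
    header = struct.pack(HEADER, *origin, resolution, len(points))
    return header + bytes(body)


def dequantize_points(blob):
    """Recover approximate map points from a blob made by quantize_points."""
    ox, oy, oz, res, n = struct.unpack_from(HEADER, blob, 0)
    offset = struct.calcsize(HEADER)
    step = struct.calcsize(POINT)
    points = []
    for k in range(n):
        qx, qy, qz = struct.unpack_from(POINT, blob, offset + k * step)
        points.append((ox + qx * res, oy + qy * res, oz + qz * res))
    return points


if __name__ == "__main__":
    pts = [(1.234, -5.678, 0.5), (10.0, 2.25, -3.1)]
    blob = quantize_points(pts)
    print(len(blob))  # 36-byte header + 6 bytes per point = 48
```

Under these assumptions, each point costs 6 bytes instead of 12 for float32 triplets, and the reconstruction error per coordinate stays within half the resolution (up to floating-point rounding). The trade-off is a maximum extent of 655.35 m per axis at 1 cm resolution. A real system would more likely also transmit keyframe poses and descriptors, which this sketch ignores.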

This thesis has been conducted by Robbe Adriaens (Robbe.Adriaens@UGent.be) and is in collaboration with the Royal Military Academy (RMA).

References

[1] J. Engel, J. Stückler and D. Cremers, “Large-scale direct SLAM with stereo cameras,” 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, 2015, pp. 1935-1942.

[2] R. Mur-Artal and J. D. Tardós, “ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras,” in IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255-1262, Oct. 2017.

[3] S. Dong, K. Xu, Q. Zhou, A. Tagliasacchi, S. Xin, M. Nießner and B. Chen, “Multi-robot collaborative dense scene reconstruction,” in ACM Transactions on Graphics, vol. 38, no. 4, Article 84, July 2019.